Hey, have you heard? Music is getting worse! They sent a bunch of musicologists out and had them count chord complexity in songs and, yep, it's gone down a whole bunch!

Except, not really. It's really only pop music that has been getting dumber. Every other genre of music has trended towards more complexity, not less. Which begs the question - what the hell is "pop music", anyway? It's short for "popular"; but this is less a matter of conscious decision to listen and moreso the way that music is marketed. You see, if you're really "into" music, you're listening to niche genres, which you have to actively seek out and find. The mainstream is just what is given to you if you don't really have a taste in music.

This niche/mainstream split is everywhere and every medium wants to split this way. Music already has pop. Movie studios have "tentpole" films that "hold up" the tent for smaller productions to take risks. TV can be divided into complex dramas and brain-smoothing mush. Video games have well-made indie works and Fortnite. YouTube has some of the most in-depth and thought-provoking video essays right next to SEO spam, reuploaded garbage, and obvious scams.

But why does the mainstream decay like this?

Words Are Cool, But Coercive Book Monopolies Are Cooler

Let me put you into the shoes of a major publisher of books. Your job is to fund the production of new books to be sold into the market. You make money when you sell a copy of your book to a reader; so you want to maximize the number of readers. This means you want a work that can sell into the mainstream - one that can be pushed on people who don't read very many books.

Well, how do you do that?

Look at whatever's currently at the top of the bestseller lists, and Copy Exactly!

Ok, well, you can't really do that, because we have copyright law. But you can't copyright a plot twist, an archetype, or a concept - just very specific arrangements of such. And so, books are made to resemble what is already made, as a sort of cargo cult of writing. Think about how many English-language YA books are reusing tropes established by the original Harry Potter, like having a society where personality is a caste system. Or how many Japanese-language light novels have RPG mechanics be a part of the plot partially because that genre of writing grew out of retellings of D&D sessions. Trends chase their tails forever in the mainstream, forming a closed loop.

There's another way to get at the top of the charts, though: actually be a good book and have a wide appeal for multiple demographics. This is the mythical "execution" that executives like to talk about in the same way one might talk about the whims of God. Despite how much trend-chasing happens, there are still obstinate creators writing novel works that enter the mainstream and change what those trends are. Humans stubbornly refuse to conform their art to statistics.

But this is the 2020s; why not just self-publish?

Well... now instead of publishers making or breaking your work, you have platforms looking to squeeze you dry. Platforms enable all sorts of abuses, because they have no incentive to see your particular book doing well. They just want books to sell, and they want a lot of books to sell - more than any one customer could possible search through. So they employ lots of compute power and machine learning to try and pair books to customers based on relatively superficial metadata like purchase patterns or keywords.

The Death Of The Artist

The year is 20XX. Everyone creates AI movies with superhuman levels of perfection. Because of this, the winner of the Oscars depends solely on release date. The marketing metagame has evolved to ridiculous levels due to it being the only remaining factor to decide nominations.

Or at least that's what the hype around generative art is. The reality of generative art is a lot of prompt engineering and fiddling to get the machine to conform to your vision. Remember: machine learning does not actually comprehend your prompt in the way that you'd expect. It just has statistical patterns between words and images that let it compress a list of up to 77 words into 512 sliders that correspond to various image features. Those sliders are then fed into an image denoiser alongside random junk data that it iterates upon until it draws something akin to the statistical distribution of the data that the denoiser was trained on.

This is an absolutely fantastic set of statistical tools, but it is not a complete package that turns a talentless hack into the next Banksy. What it's doing is producing images that are "like" the training set data that it put in. That's why prompt engineering works - machine learning associated the tokens "trending on artstation" with "good art" in the same way it associates "oil on canvas" with brushwork, portrait lighting, or historical clothing. This is, of course, an engine for unlimited algorithmic trend-chasing: whatever was popular at the time that LAION-5B was crawled will also be what art generator likes to draw, because that's what it saw the most.

So a lot of AI art looks... same-y. Because it learned to copy the same trends that were already being chased by everyone else. If you want your AI art to look distinct, you are fighting an uphill battle. Machine learning stubbornly refuses to conform it statistics to art. And you can see this in the hype cycle of art generators. At first, it feels like a Magic Crayon - a device that dramatically increases your expressive power. Then people notice when it fails - notably, AI is worse at drawing hands than I am - and start to realize when they're being thrown generated art. And even when the art isn't deliberately wrong, it can be subtly obvious that it's been generated by a machine - simply by the things that its good at being used more often and thus being less valued.

When you train any ML system, you are constantly at the mercy of the dataset. Specifically, the more data you have, the better the model does its task. This is known as the Scaling Laws; ML always does better if you throw more data, compute, and parameters at the problem. LAION-5B, which I mentioned earlier, is entirely scraped public images from the entire Internet. Ignoring the ethical problems (even if it does wind up being legal), this has a second problem: generated images from prior models being fed back into the data set to train the next set of models.

How would this happen? Simple: people abusing the model for spam. You see, while a human has to spend time and effort to write or draw something, a trained model can just churn out endless reams of statistically-conformant nonsense. This is a dream for people who want to produce spam. And people are already producing low-effort "spam" books, even selling bogus courses on how to abuse platforms to sell spam books to people. Google already considers generated art to be an abusive spam technique.

Existing ML systems had the luxury of surprise. GPT-3 could train on a dataset collected before human-convincing text generation. GPT-2 did exist, but nobody would try to fill a website up with it to fool Google. Same with U-Net based DALL-E, Imagen, Midjourney, and Stable Diffusion. The state-of-the-art before them were GANs, which at best can do convincing faces atop really weird backgrounds.

Nice Meme

The following anecdote arguably contains spoilers for Jojo's Bizarre Adventure: Diamond is Unbreakable.

So, a while back when text-to-image art generators were being treated like nuclear reactor technology, someone on Huggingface trained their own art generator and called it DALL-E Mini. This was the first generator that the average user could actually use, and so people did, including me.

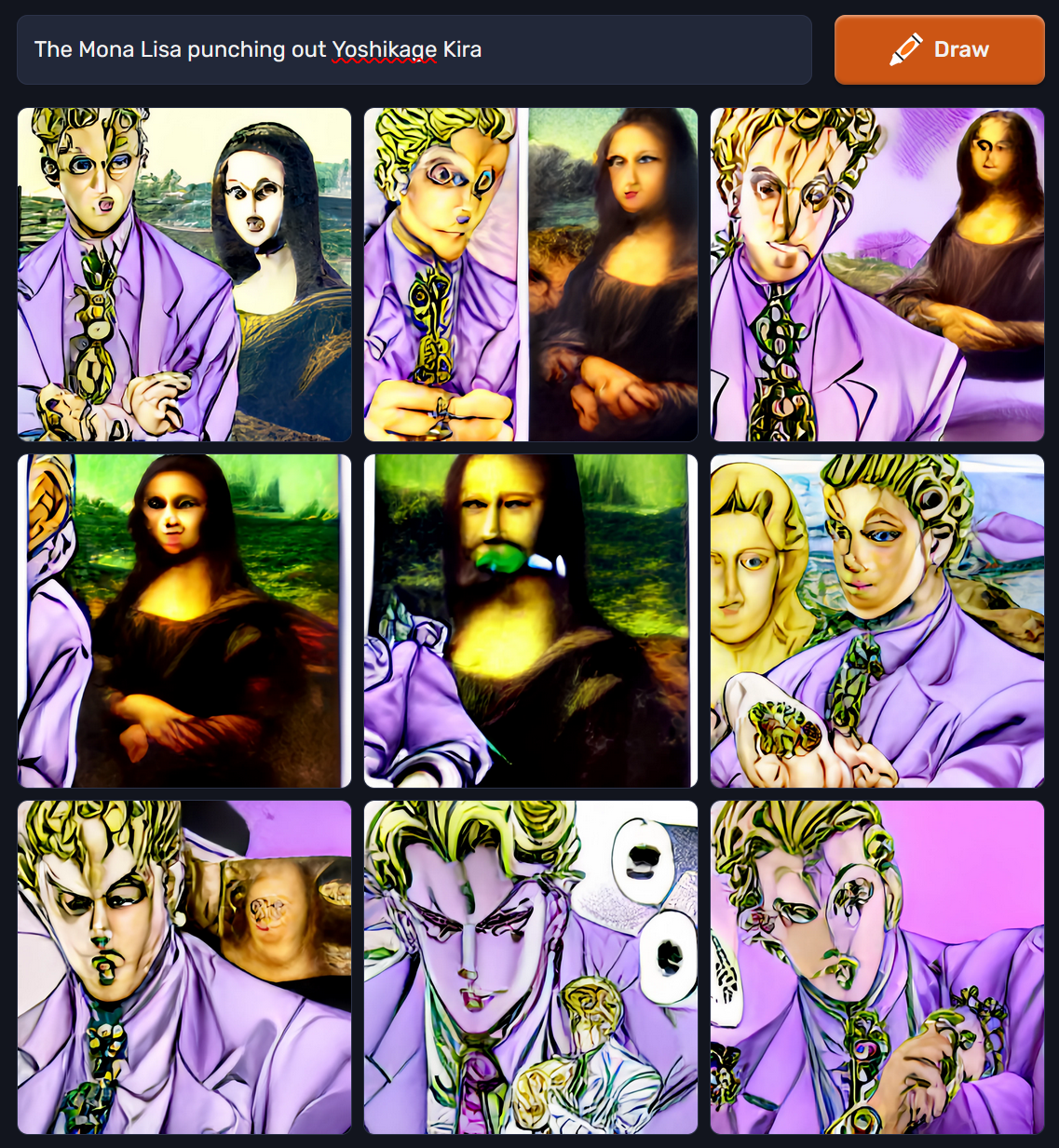

It was fairly trivial and entertaining to get it to spit out fanart of copyrighted characters in odd styles, crossovers, or funny poses. In fact, I'm pretty sure a lot of people are using art generators just to spit out fan art, albeit for way more, uh... salacious purposes. One of my ideas was very simple: "The Mona Lisa punching out Yoshikage Kira". Y'see, it'd be funny, because the antagonist of Part 4 talks about...

Oh, sorry, you don't really care about my stupid cringe meme edits. Got it. Well hold on just a second and take a look at the result:

I had to actually regenerate this, but I don't think they've retrained Craiyon since I first tried it earlier this year

As you can see, it did not actually draw the action requested in the prompt. It just gave me a few bad photobashes. And as I'd learn playing around with the system, there were plenty of other particular key words that would just automatically give me certain Famous Images instead of the actual result.

This is because of overfitting. What ML training actually is, is a process of adjusting the millions of knobs on your neural network to make the model more likely to generate a desired output - in this case, the noise off a slightly noisy duplicate of a training set image. And every time you do this with the same input, the model continues to trend towards that output. If you do it enough times, the model stops generalizing and just memorizes the output.

Of course, most ML researchers know about this, and set their training epochs low enough and feed enough data into it that overfitting shouldn't happen. But that's assuming that every image in the training set is unique. Famous Images break this assumption because they will be on many websites, and get compressed, cropped or scaled in different ways that will break simple image similarity detection algorithms. And of course the keywords I used in the prompt correspond to two very Famous Images: one is basically used as a sort of memetic verbal shorthand, and the other is a painting by Leonardo da Vinci.

Inverse Scaling Is Coming

I'd like to postulate the existence of a new kind of Famous Image: art generator output itself.

Of course, this isn't a single image, but the statistical distribution of images commonly generated by the sum total of competent image generators. They won't overfit in the same way that a single image can. But they will anchor future models around a specific set of trends. Since real artists will be outpaced by art generators and their output, machine learning will just be learning from itself, for the sake of itself, and it will produce whatever it has seen before.

The Internet will become a market for lemons, and because machine learning is a reflection of what's on the Internet...

It's a bold claim, but I would not be surprised if we start seeing inverse scaling. Or at least an exhaustion of data scalability. We obviously can continue on with our existing LAION-5B trained models, but we won't be able to make anything better simply by stealing more art unless we get proper "AGI" (as in, what AI was supposed to be), or at least ways to filter out prior models' output from new models.

On the other hand, it's not like prior waves of trend-chasing killed new art. But it did make them harder to find. Artists trying to create new work are already swimming upstream.